In this project, I worked with a group to train a deep learning model that enables a PiCar to autonomously follow lane markings using camera input. The project was completed as part of a deep learning fundamentals course and served as an introduction to applying convolutional neural networks (CNNs) to real-world robotic control tasks.

The project focused on designing, training, and evaluating a neural network that maps camera images directly to steering commands. At the end of the project, our group presented the model in a live demonstration.

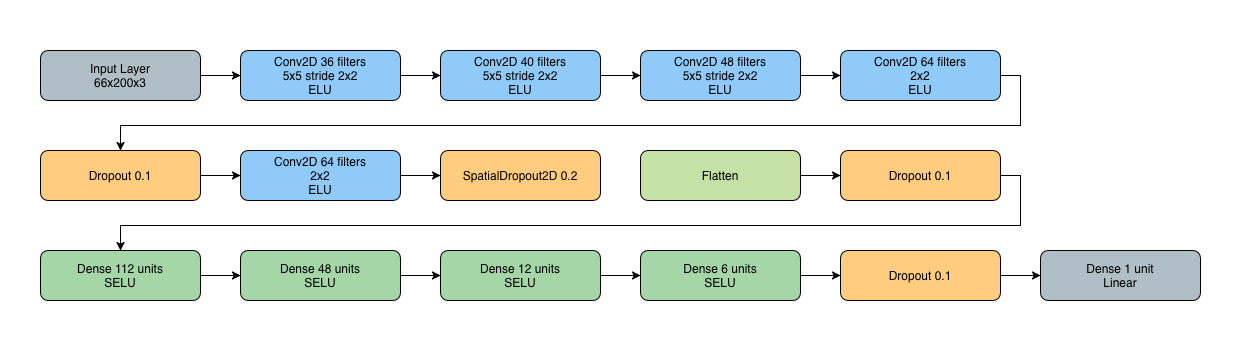

Model Architecture

We built and trained a custom convolutional neural network using TensorFlow and Keras. We aimed for a good tradeoff between size (the model had to fit on the PiCar's onboard single-board computer, or SBC) and accuracy, and ended up with a model that has the following features:

- 5 Conv2D layers for feature extraction

- 5 Dense layers (including the final output layer) that map the extracted features to the steering command

- Approximately 270,000 trainable parameters
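Dropout layers and activation functions add no weights, so the parameter count comes entirely from the convolutional and dense layers. The sketch below shows that arithmetic. The input size, filter counts, kernel sizes, and dense widths are all hypothetical. They follow a PilotNet-style convolutional stack (24/36/48/64/64 filters, as in NVIDIA's end-to-end driving work) with an assumed five-layer dense head. They are not our exact configuration.

```python
# Parameter-count arithmetic for a HYPOTHETICAL PilotNet-style CNN.
# Layer sizes are illustrative assumptions, not the project's exact model.

def conv2d_params(kernel: int, in_channels: int, filters: int) -> int:
    """Conv2D: kernel*kernel*in_channels weights per filter, plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters


def dense_params(in_units: int, out_units: int) -> int:
    """Dense: a full in x out weight matrix, plus one bias per output unit."""
    return in_units * out_units + out_units


def conv_output_size(size: int, kernel: int, stride: int) -> int:
    """Output length along one spatial axis for a 'valid' (unpadded) convolution."""
    return (size - kernel) // stride + 1


def count_params(input_shape, conv_layers, dense_units) -> int:
    """Sum trainable parameters; Dropout and activations contribute none."""
    height, width, channels = input_shape
    total = 0
    for filters, kernel, stride in conv_layers:
        total += conv2d_params(kernel, channels, filters)
        height = conv_output_size(height, kernel, stride)
        width = conv_output_size(width, kernel, stride)
        channels = filters
    in_units = height * width * channels  # size of the Flatten output
    for units in dense_units:
        total += dense_params(in_units, units)
        in_units = units
    return total


# Hypothetical configuration: (filters, kernel, stride) for each Conv2D layer.
INPUT_SHAPE = (66, 200, 3)
CONV_LAYERS = [(24, 5, 2), (36, 5, 2), (48, 5, 2), (64, 3, 1), (64, 3, 1)]
DENSE_UNITS = [100, 50, 20, 10, 1]  # five Dense layers; the last is the steering output

print(count_params(INPUT_SHAPE, CONV_LAYERS, DENSE_UNITS))  # 252939
```

With these assumed sizes, the total is 252,939 parameters, which is the same order of magnitude as our ~270,000. The first dense layer after `Flatten` accounts for 115,300 of them. The size of the last feature map and the width of that first dense layer are therefore the main levers on model size.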

Through experimentation, we found that:

- ELU activations worked best for all convolutional layers

- SELU activations worked best for the dense layers

The final output layer uses a linear activation, since predicting a steering angle is a regression task.
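For reference, SELU is simply ELU with a fixed α and an extra fixed scale. The sketch below implements both element-wise with Python's `math` module. It uses the standard SELU constants (the values Keras uses) and ELU's default α = 1.0.

```python
import math

# Standard SELU constants, matching the values used by Keras.
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: identity for positive inputs, alpha * (e^x - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def selu(x: float) -> float:
    """SELU: a scaled ELU whose constants are chosen to push activations
    toward zero mean and unit variance (under suitable initialization)."""
    return SELU_SCALE * elu(x, SELU_ALPHA)


for v in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={v:+.1f}  elu={elu(v):+.4f}  selu={selu(v):+.4f}")
```

The Keras documentation notes that SELU's self-normalizing behavior assumes LeCun-normal initialization and recommends `AlphaDropout` over regular dropout alongside it. It may be worth checking how the dense layers were initialized.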

To reduce overfitting, we also added four Dropout layers, one of which was a SpatialDropout layer.
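The difference between standard and spatial dropout is easiest to see on a toy feature map. This pure-Python sketch is a simplification of what Keras's `Dropout` and `SpatialDropout2D` do during training. Each channel's spatial positions are flattened into one list. Standard dropout zeroes individual activations, while spatial dropout zeroes whole channels. Both scale the surviving values by 1 / (1 - rate), the "inverted dropout" convention, so the expected activation is unchanged.

```python
import random


def dropout(feature_map, rate, rng):
    """Standard dropout: each activation is kept or zeroed independently."""
    keep = 1.0 - rate
    return [[x / keep if rng.random() < keep else 0.0 for x in channel]
            for channel in feature_map]


def spatial_dropout(feature_map, rate, rng):
    """Spatial dropout: one keep/drop decision per channel, applied to the whole map."""
    keep = 1.0 - rate
    out = []
    for channel in feature_map:
        if rng.random() < keep:
            out.append([x / keep for x in channel])
        else:
            out.append([0.0] * len(channel))
    return out


# Toy feature map: 4 channels, each with 6 flattened spatial positions set to 1.0.
fmap = [[1.0] * 6 for _ in range(4)]
rng = random.Random(0)
print("dropout:        ", dropout(fmap, 0.5, rng))
print("spatial dropout:", spatial_dropout(fmap, 0.5, rng))
```

Neighboring activations in a convolutional feature map are usually strongly correlated, so dropping single values regularizes them only weakly. Dropping entire channels is the usual reason to use the spatial variant after a convolutional layer.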

Training Setup

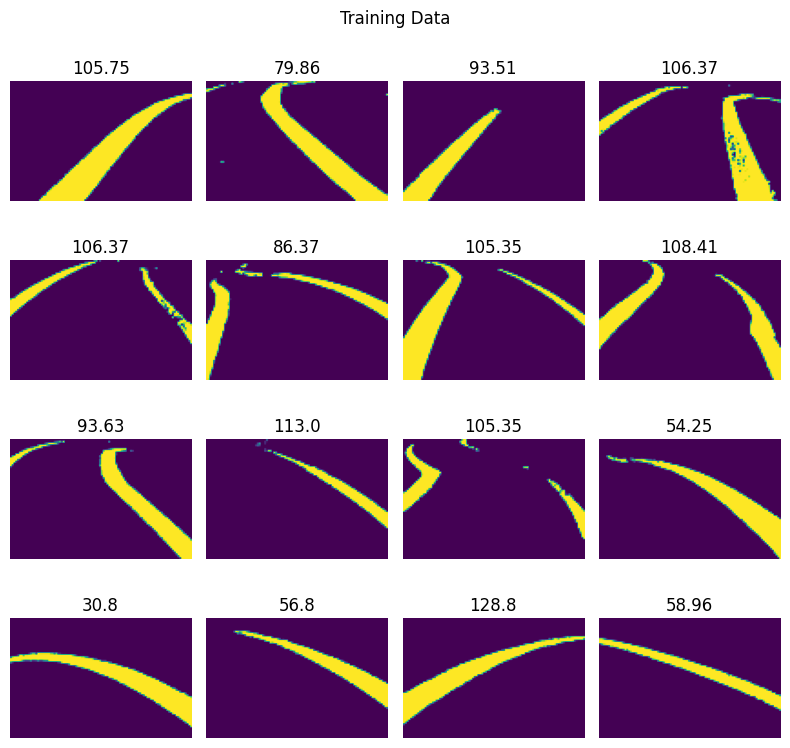

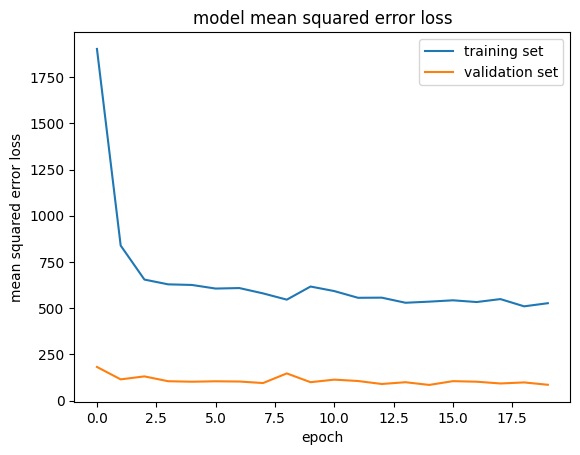

Once the layers were set up, we trained the model for 20 epochs with a learning rate of 0.001, using pre-captured camera images as inputs and the corresponding steering angles as labels.

The final trained model was saved as an .h5 file with a size of approximately 3 MB, making it lightweight enough for deployment on embedded hardware.
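The ~3 MB figure is consistent with a back-of-the-envelope estimate under two assumptions the write-up does not state. First, the weights are stored as 32-bit floats. Second, the .h5 file includes the optimizer state (TF2-era Keras includes it by default in full-model saves), and the optimizer is Adam-style, keeping two extra values per weight.

```python
def estimate_model_file_bytes(n_params: int, bytes_per_value: int = 4,
                              optimizer_slots: int = 2) -> int:
    """Rough saved-model size: the weights plus any per-weight optimizer slots.

    ASSUMES float32 storage (4 bytes) and, by default, two slots per weight
    (Adam's first- and second-moment estimates). Ignores HDF5 metadata overhead.
    """
    return n_params * bytes_per_value * (1 + optimizer_slots)


n_params = 270_000  # approximate trainable-parameter count from the write-up
with_optimizer = estimate_model_file_bytes(n_params)
weights_only = estimate_model_file_bytes(n_params, optimizer_slots=0)
print(f"with optimizer state: {with_optimizer / 1e6:.2f} MB")  # 3.24 MB
print(f"weights only:         {weights_only / 1e6:.2f} MB")    # 1.08 MB
```

If this estimate holds, saving only the weights (for example with `model.save_weights`) would shrink the deployed file to roughly a third of its size. The optimizer state is not needed for inference.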

Performance and Observations

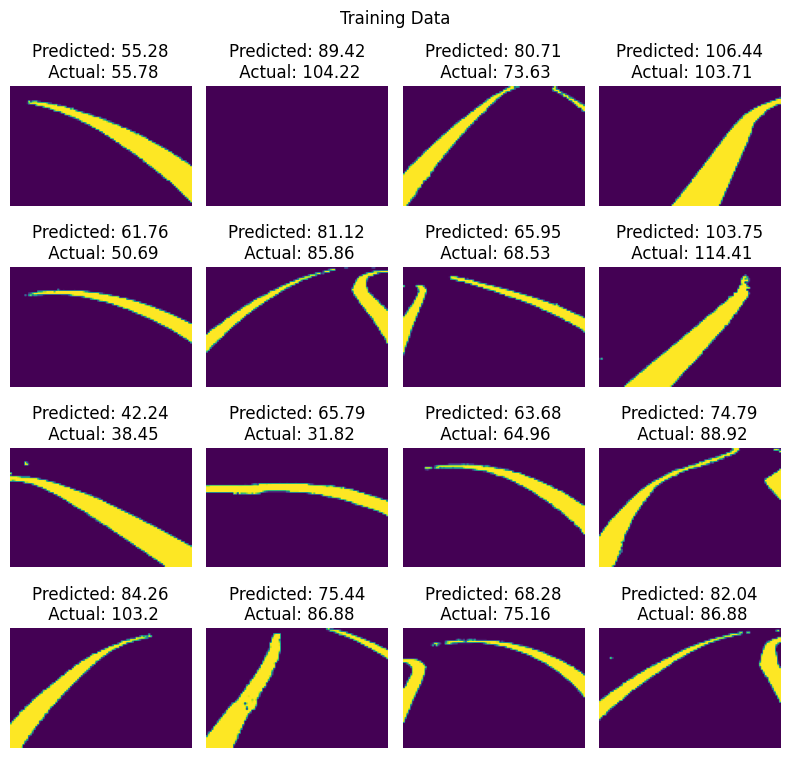

The best-performing model reached a validation loss of approximately 84, with occasional runs closer to 80. Earlier versions achieved a lower validation loss, but changes to the dataset introduced a bias that caused poor right-turn predictions.
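The loss value is easier to interpret as an error in steering units. This conversion assumes the loss was mean squared error and the steering angle was measured in degrees; neither is stated above. Under those assumptions, the validation losses correspond to a root-mean-square steering error of roughly:

$$
\mathrm{RMSE} = \sqrt{\mathrm{MSE}}, \qquad \sqrt{84} \approx 9.2^\circ, \qquad \sqrt{80} \approx 8.9^\circ
$$

Under the same assumptions, the gap between a loss of 84 and one of 80 amounts to only about 0.2° of typical steering error.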

After retraining on corrected data, the model predicted left turns very accurately, but it occasionally failed on right turns, primarily because of dataset imbalance.

During lab demonstrations, the PiCar followed lanes reliably in most scenarios; its remaining failures were clearly tied to training data skewed toward left turns.
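A quick way to quantify this kind of skew before training is to bucket the steering labels into left, straight, and right. The sketch below uses a hypothetical convention: 90 degrees means straight ahead, smaller angles steer left, and anything within ±5 degrees counts as straight. The actual PiCar convention may differ, in which case `CENTER` and `DEADBAND` would need adjusting.

```python
from collections import Counter

CENTER = 90.0    # HYPOTHETICAL: steering angle (degrees) meaning "straight ahead"
DEADBAND = 5.0   # HYPOTHETICAL: angles within +/- DEADBAND of CENTER count as straight


def turn_direction(angle: float) -> str:
    """Bucket one steering label; assumes angles below CENTER steer left."""
    if angle < CENTER - DEADBAND:
        return "left"
    if angle > CENTER + DEADBAND:
        return "right"
    return "straight"


def label_balance(angles):
    """Return the fraction of labels in each bucket (all zeros for empty input)."""
    counts = Counter(turn_direction(a) for a in angles)
    total = len(angles) or 1
    return {k: counts[k] / total for k in ("left", "straight", "right")}


# Illustrative labels only, not project data.
sample = [60, 65, 70, 72, 88, 90, 93, 110]
print(label_balance(sample))  # {'left': 0.5, 'straight': 0.375, 'right': 0.125}
```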

What I’d Improve Next

If I were to continue this project, I would focus primarily on improving the dataset. Collecting more balanced training data, especially for right turns, would likely improve consistency.
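A common, cheap complement to collecting new right-turn data is to mirror existing frames horizontally and mirror their steering labels. This turns every left-turn example into a right-turn one. The sketch below uses nested Python lists as a stand-in for image arrays. It assumes a hypothetical convention in which 90 degrees is straight ahead, so a mirrored angle is 180 minus the original. With signed angles centered on 0, the mirrored angle would simply be the negation.

```python
def mirror_example(image, angle, center=90.0):
    """Flip an image left-to-right and mirror its steering label about `center`.

    `image` is a list of rows, each a list of pixels (a stand-in for an
    H x W x C array). `center` is the HYPOTHETICAL straight-ahead angle.
    """
    flipped = [list(reversed(row)) for row in image]
    mirrored_angle = 2 * center - angle
    return flipped, mirrored_angle


def augment_with_mirrors(dataset, center=90.0):
    """Return the original (image, angle) pairs plus a mirrored copy of each."""
    augmented = list(dataset)
    augmented.extend(mirror_example(img, ang, center) for img, ang in dataset)
    return augmented


# Tiny 2x3 "image" whose pixel values mark their positions.
image = [[1, 2, 3],
         [4, 5, 6]]
flipped, new_angle = mirror_example(image, 70.0)
print(flipped, new_angle)  # [[3, 2, 1], [6, 5, 4]] 110.0
```

Mirroring yields exactly balanced left and right counts. However, it also mirrors anything asymmetric in the scene, such as a track whose lane markings differ on each side. It complements collecting real right-turn data rather than replacing it.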

I would also experiment with:

- Data augmentation (better masking techniques, brightness changes, etc.)

- Temporal models that incorporate multiple frames instead of a single image

- Additional regularization or alternative loss functions
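One simple form of temporal model is frame stacking. The idea is to keep the last few frames and concatenate them along the channel axis, so an otherwise unchanged CNN can see short-term motion. `FrameStacker` below is a hypothetical helper, not part of our code or of Keras. It works on nested lists of per-pixel channel values. The choice of `k = 3` frames and padding the start of a run by repeating the first frame are both assumptions.

```python
from collections import deque
from itertools import chain


class FrameStacker:
    """HYPOTHETICAL helper: keeps the last k frames and stacks them channel-wise.

    A frame is a list of rows; each row is a list of pixels; each pixel is a
    list of channel values (a stand-in for an H x W x C array).
    """

    def __init__(self, k: int = 3):
        self.k = k
        self.frames = deque(maxlen=k)  # the oldest frame falls off automatically

    def push(self, frame):
        """Add a frame and return the stacked H x W x (k*C) input."""
        if not self.frames:
            # At the start of a run, pad by repeating the first frame.
            self.frames.extend([frame] * (self.k - 1))
        self.frames.append(frame)
        return self._stack()

    def _stack(self):
        first = self.frames[0]
        height, width = len(first), len(first[0])
        # For each pixel, concatenate channel lists from the oldest to the newest frame.
        return [[list(chain.from_iterable(f[r][c] for f in self.frames))
                 for c in range(width)]
                for r in range(height)]


# 1x2 single-channel frames whose value encodes the frame index.
stacker = FrameStacker(k=3)
stacker.push([[[0], [0]]])
stacker.push([[[1], [1]]])
print(stacker.push([[[2], [2]]]))  # [[[0, 1, 2], [0, 1, 2]]]
```

The trade-off is a k-fold increase in the first convolution's input channels (and its weights), plus a short warm-up at startup. Recurrent layers, such as an LSTM over per-frame features, are the other common option.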

These changes would probably improve the model significantly. Even without them, however, I think our model was an excellent fit for this application.